Journal of Hepatology

Volume 58, Issue 4, Pages 811-820, April 2013

Javier Briceño, Ruben Ciria, Manuel de la Mata

Received 25 July 2012; received in revised form 17 September 2012; accepted 13 October 2012; published online 25 October 2012

Summary

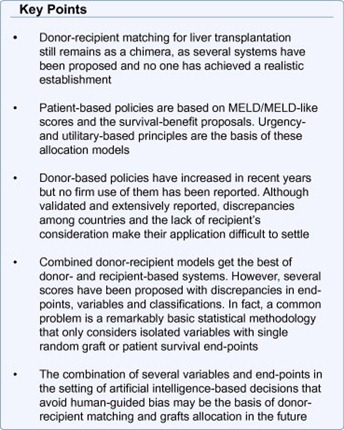

Liver transplant outcomes keep improving, with refinements of surgical technique, immunosuppression and post-transplant care. However, these excellent results and the limited number of organs available have led to an increasing number of potential recipients with end-stage liver disease worldwide. Deaths on waiting lists have led liver transplant teams to maximize every organ offered and used in terms of pre- and post-transplant benefit. Donor-recipient (D-R) matching could be defined as the technique to check D-R pairs adequately associated by the presence of the constituents of some patterns from donor and patient variables. D-R matching has been extensively analysed and policies in donor allocation have tried to maximize organ utilization whilst still protecting individual interests. However, D-R matching has been written through trial and error and the development of each new score has been followed by strong discrepancies and controversies. Current allocation systems are based on isolated or combined donor or recipient characteristics. This review intends to analyze current knowledge about D-R matching methods, focusing on three main categories: patient-based policies, donor-based policies and combined donor–recipient systems. All of them rest on three mainstays that support three different concepts of D-R matching: prioritarianism (favouring the worst-off), utilitarianism (maximising total benefit) and social benefit (cost-effectiveness). All of them, with their pros and cons, offer an exciting and controversial topic for discussion. All of them together define D-R matching today, turning into myth what we considered a reality in the past.

Abbreviations: D-R, donor-recipient; UNOS, United Network for Organ Sharing; MELD, Model for End Stage Liver Disease; SBE, Symptom-based exceptions; INR, International Normalized Ratio; LT, Liver transplantation; ECD, Extended criteria donors; SB, Survival benefit; CIT, Cold ischemia time; DRI, Donor risk index; DCD, Donation after cardiac death; SRTR, Scientific Registry of Transplant Recipients; PGF, Primary graft failure; SOLD, Score Of Liver Donor; SOFT, Survival Outcomes Following Liver Transplant; ROC, Receiver operating characteristic; BAR, Balance of risk score; HCC, Hepatocellular carcinoma; HCV, Hepatitis C virus

Keywords: Liver, Transplantation, Donor-recipient, Matching, Outcomes, Allocation

Introduction

Liver allocation policies have been staged by precise strategies to turn arbitrary criteria into well-established and objective models of prioritization. The fast onset of this turnover has led to the coexistence of different models and metrics, with their pros and cons, with their goodness and boundaries, with their dogmas and fashions; in short, with their myths and realities.

Liver transplant (LT) outcomes have improved over the past two decades. Unfortunately, with an increasing number of individuals with end-stage liver disease and a limited number of organs to meet this demand, the growing discrepancy has led to the dismal scenario of waitlist deaths [1]. Moreover, the use of less stringent selection criteria to expand the donor pool has highlighted the importance of recipient and donor factors for transplant outcomes [2].

Donor-recipient (D-R) matching has been strongly analysed and policies in donor allocation have tried to maximize organ utilization whilst still protecting individual patient interests. However, D-R matching has been written through trial and error, with early baseline weak rules [3] which have changed continuously. Several analyses and over-analyses of databases have yielded non-uniform donor and/or recipient selection criteria to make an appropriate D-R matching. In the late 1990s, traditional regression models, estimating the average association of one factor with another, were used [4]. Consequently, an independent association could be demonstrated, whilst adjusting for other confounding factors. However, this was a simplistic approach when lots of donor and recipient variables were considered [5], [6], [7]. Subsequent complexity with stratified models was more realistic, and very useful scores have been depicted with this approach [8]. However, the increasing expectancy with the development of each new score has been followed by strong discrepancies and controversies [9], [10].

Match is defined as “a pair suitably associated” [11]. D-R matching could be defined as “the technique to check D-R pairs adequately associated by the presence of the constituents of some patterns from donor and patient variables”. This definition, however, lacks a purpose. Possible purposes can be graft survival, patient survival, waitlist survival, survival benefit and evidence-based survival; furthermore, all of them can be tabulated in terms of transparency, individual and/or social justice, population utility and overall equity [10]. D-R matching combines a donor acceptance policy and an allocation policy to gain advantages (i.e., survival) over a random, experimental or subjective interpretation in terms of better precision. In some circumstances, D-R matching means a higher exactness. Current allocation systems are based on isolated or combined donor or recipient characteristics (Fig. 1). This review considers patient characteristics-based systems, donor risks-based systems, and combined D-R-based systems (Table 1).

Fig. 1. Current composite formulations of donor-risk-based systems (left), patient-risk-based systems (right) and combined donor-recipient-based systems (middle) for donor-recipient matching available in the literature. COD (cause of death), CVA (cerebrovascular accident), DCDD (donation after circulatory determination of death) and PVT (portal vein thrombosis).

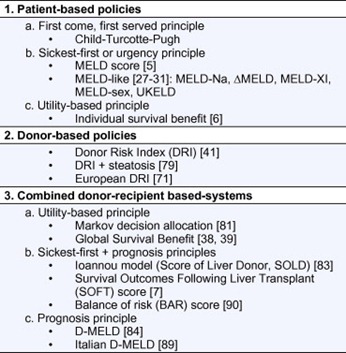

Table 1. Classification of allocation models useful for donor-recipient matching.

Patient-based policies

Urgency principle: MELD/MELD-like

In the early years of LT, allocation was a clinician-guided decision. Time on the waiting list became the major determinant of receiving a graft, but this allocation system engendered an unacceptable number of inequities for many candidate subsets and profound regional and centre differences. In this context, a score named MELD [12], the acronym of the Model for End Stage Liver Disease, became a metric by which the severity of liver disease could be accurately described. Moreover, listed candidates could be ranked by their risk of waiting list mortality independently of the time spent on the list (medical urgency) [5]. UNOS made several changes to the calculation of the MELD score [13]. These adjustments resulted in a continuous score whose extremes represent the lowest and the highest probability of 3-month waitlist mortality. As the model is based on purely objective laboratory variables, a potentially transparent and observer-independent method has been implemented in the United States since 2002, and soon afterwards worldwide [14], [15], [16], [17].

The strengths of MELD are its significant contribution to reducing mortality on the waiting list [13], [18], a reduction of the number of futile listing, a decrease in median waiting time to LT, and, finally, an increase in mean MELD score in patients who underwent transplantation after MELD implementation [10].

Unfortunately, the initial enthusiasm with MELD was followed by some caveats and objections that weaken its solidity. First, although the empirical adjustments by UNOS in calculating the MELD score have some rationale, they were not based on validated studies [13], [19]. Second, the components of the MELD formula (creatinine, bilirubin and INR) are not so objective due to interlaboratory variability [9], [20], [21], with a reported risk of “gaming” the system by choosing one laboratory or another [22]. Third, MELD is useful only for most non-urgent cirrhotic patients. Growing indications such as hepatocellular carcinoma and symptom-based exceptions (SBE) are all mis-scored by MELD, leaving half of the patients inadequately scored and dependent on priority extra points that have been arbitrarily up- and downregulated [17], [23], [24], [25]. Fourth, extreme MELD values may be considered exceptions as well, i.e., the highest MELD values (>40) [26] and lower-MELD patients with cirrhosis and hyponatremia. A trend to substitute the classic MELD score by a more comprehensive MELD-sodium score is under debate [27]. Together with this MELD-Na score, a myriad of metrics based on MELD improvements according to particular situations have been described: delta-MELD [28]; MELD-XI [29]; and MELD-gender [30]. The UKELD score is the equivalent of MELD in the United Kingdom [31]. None of these variations has gained enough importance to replace the original MELD score [32].
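To make the role of the three laboratory components concrete, the following is a minimal sketch of the widely published UNOS form of the laboratory MELD calculation. The coefficients, the floor of 1.0 on each value, the creatinine cap of 4.0 mg/dL (also assigned to dialysis patients) and the score cap of 40 are not given in this review; they are assumptions taken from the commonly cited formula, and the function and parameter names are hypothetical.

```python
import math


def meld_score(creatinine_mg_dl, bilirubin_mg_dl, inr, on_dialysis=False):
    """Laboratory MELD under the commonly cited UNOS conventions (assumed here)."""
    # Floor every laboratory value at 1.0 so that no logarithm is negative.
    creatinine = max(creatinine_mg_dl, 1.0)
    bilirubin = max(bilirubin_mg_dl, 1.0)
    inr = max(inr, 1.0)

    # Cap creatinine at 4.0 mg/dL; dialysis patients are assigned the cap.
    creatinine = 4.0 if on_dialysis else min(creatinine, 4.0)

    raw = (
        9.57 * math.log(creatinine)
        + 3.78 * math.log(bilirubin)
        + 11.2 * math.log(inr)
        + 6.43
    )
    # Round to an integer and cap the result at 40.
    return min(round(raw), 40)
```

Because each component enters through a logarithm with a fixed weight, a small shift in a reported INR or creatinine value can move a candidate by one or more points, which is why the interlaboratory variability noted above matters for ranking.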

D-R matching occurs at the time of organ procurement. However, because the MELD score ignores donor characteristics, the assignment of a donor to the sickest listed patient cannot be considered true D-R matching. Therefore, in a MELD-based allocation policy, a concrete D-R combination does not necessarily mean the best combination in terms of outcome. One of the drawbacks of the MELD score is the impossibility of donor selection. This is more evident in patients with similar MELD scores, as a similar score does not mean equal outcomes, especially with the growing use of extended criteria donors (ECD). The MELD score may correctly stratify patients according to their level of sickness, but D-R pairs are not well categorized in accordance with the net benefit of their combinations. MELD was not designed for D-R matching and is therefore a suboptimal tool for this aim.

Utility-based principle (survival benefit)

MELD is of little use in predicting post-transplant outcomes. Several studies have shown a poor correlation between pretransplant disease severity and post-transplant outcome [13], [33], [34], [35], [36]. An allocation policy based on the utility principle would give priority to candidates with better expected outcomes, rather than emphasising waitlist mortality.

Considering both waitlist mortality (urgency principle) and post-transplant mortality (utility principle), a benefit-of-survival concept has been recently identified [6]. Survival benefit (SB) computes the difference between the mean lifetime with and without an LT. This new allocation system seeks to minimize futile LT, giving first concern to patients with a predicted best lifetime gained due to transplantation [37]. Under an SB model, an allocated graft goes to the patient with the greatest difference between the predicted post-transplant lifetime and the predicted waiting list lifetime for this specific donor [38], [39].
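The allocation rule described above can be sketched in a few lines. This is only an illustration: the `Candidate` class, the function names and every number below are hypothetical, and a real SB model would derive the two lifetime predictions from fitted survival models for the specific donor on offer.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    waitlist_years: float         # predicted mean lifetime without LT
    post_transplant_years: float  # predicted mean lifetime with LT from this donor


def survival_benefit(candidate: Candidate) -> float:
    """Difference between predicted lifetime with and without transplantation."""
    return candidate.post_transplant_years - candidate.waitlist_years


def allocate(candidates):
    """Return the candidate with the greatest survival benefit for this donor."""
    return max(candidates, key=survival_benefit)


# Hypothetical predictions for one specific donor offer.
candidates = [
    Candidate("A", waitlist_years=0.5, post_transplant_years=6.0),
    Candidate("B", waitlist_years=4.0, post_transplant_years=7.0),
    Candidate("C", waitlist_years=8.0, post_transplant_years=7.5),
]
chosen = allocate(candidates)
```

In this invented example candidate C has a negative benefit, which corresponds to the futile transplants that the SB concept seeks to minimize.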

In the first conception, Merion [6] analyzed post-transplant mortality risk (with transplant) and waiting list mortality risk (without transplant). This early analysis established some important concepts: (1) LT provides an overall advantage over remaining on the waiting list. (2) Survival benefit increases with a rising MELD score. (3) Consequently, there is a set of listed patients who would not benefit from LT and who may preferentially be removed from the list. The threshold for a survival benefit with transplantation is a MELD score of about 15 [40].

This initial report did not consider the impact of donor quality. Another important concern was the shortness of a maximum of one year of post-transplant follow-up for estimated SB. Schaubel et al. [39] improved the SB assertions including sequential stratifications of donor risk index (DRI) [41]. They estimated SB according to cross-classifications of candidate MELD score and DRI. The conclusions of this study included: (1) in terms of SB, candidates with different MELD scores show different benefit according to DRI. Whilst higher-MELD patients have a significant SB from transplantation, regardless of DRI, lower-MELD candidates who receive higher-DRI organs also experience higher mortality and they do not demonstrate significant SB. (2) High-DRI organs are more often transplanted into lower-MELD recipients and vice versa. This unintended consequence of the MELD allocation policy tries to avoid the addition of risks from recipients and donors, but is accompanied by a consequent decrease in post-transplant survival [42].

Current contributions to the SB allocation scheme are a myriad of complex mathematical refinements [40]. SB now represents the balance between 5-year waiting list mortality and post-transplant mortality, globally combining patient and donor characteristics. Probably the most important lesson of the current SB score is that the benefit is not only individual but also collective: the maximum gain to the patient population as a whole will occur if the patient with the greatest benefit score receives the organ. In this sense, the benefit principle is also a utilitarian principle [43].

Allocation of organs in LT has gone through three categories: from a “first-come, first-served” principle (waiting-time) [44] to a “favouring the worst-off or prioritarianism” principle (sickest first) [45], and, recently, to a “maximising total benefits or utilitarianism” principle (survival benefit). The last one combines two simple principles: the “number of lives saved” [46] principle and the “prognosis or life-years saved” [47] principle. Perhaps the most considerable advantage of an SB model is its ability to consider prognosis. Rather than saving the most lives, SB aims to save the most life-years. Living more years and saving more years are both valuable. In this sense, saving 2000 life-years per year is attractive [39]. However, three main considerations must be made. First, SB favours acutely ill patients irrespective of donor quality, because the most life-years saved occur in higher-MELD patients. Waitlist mortality risk considerably outweighs post-transplant mortality in higher-MELD candidates; however, healthier candidates, even with lower-DRI organs, are penalized because liver transplant in this group is more hazardous than remaining on the waiting list. Giving many life-years to a few (highest-SB patients) differs from giving a few life-years to many (lowest-SB patients) [47]. Survival benefit is undeniably valuable but insufficient alone [43]. Second, the LT-SB principle is not a pure “maximising total benefits” principle. It was elaborated from the MELD-score teachings, and that is why the caveats of the MELD score have been carried into the SB scheme. Recently, Schaubel et al. [39] tried to obviate this problem by incorporating 13 candidate parameters (not only the three of MELD) and MELD exceptions. This situation becomes more troubling when the authors compute the post-transplant survival model by multiplying the hazard ratio of a given recipient by the hazard ratio of a given donor. This is biased because it assumes the independence of the D-R hazards and an absence of interactions between cross-sections of D-R pairs [48]. Third, the survival-benefit-based allocation fails to account for recipient age in the proposed benefit score. Even though age predicts both pre- and post-transplant survival, LT benefit does not differ much along the spectrum of 20–70-year-old patients. According to ethics and morality, saving life-years for old, sickest patients may not be equal to saving them for younger people who have not yet lived a complete life and will be unlikely to do so without LT. The SB score lacks distributive justice [43], [49].
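The independence assumption criticised above can be written out explicitly. The notation is an illustrative assumption and is not taken from the cited models: h_0(t) is a baseline hazard, HR_R and HR_D are the recipient and donor hazard ratios, x_R and x_D are recipient and donor covariates, and gamma is a hypothetical interaction coefficient.

```latex
% Multiplicative model: recipient and donor effects act independently
h(t \mid R, D) = h_0(t)\,\mathrm{HR}_R\,\mathrm{HR}_D
              = h_0(t)\,\exp(\beta_R x_R + \beta_D x_D)

% Model allowing a donor-recipient interaction
h(t \mid R, D) = h_0(t)\,\exp(\beta_R x_R + \beta_D x_D + \gamma\, x_R x_D)
```

The first form is the special case gamma = 0 of the second. Whenever gamma differs from zero for some D-R combination, the simple product of hazard ratios misstates the hazard of that pair.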

Donor-based policies

The growing discrepancy between demand and supply is at the forefront of current dilemmas in LT. Two decades ago, the need to achieve consistent outcomes with this new therapy led clinicians to select top-quality liver donors. The increase in both listed patients and waiting list mortality led to the expansion of acceptance criteria [1], [50], [51], [52]. Progressively, the qualitative effect of individual donor variables became understood. The terms “marginal” or “suboptimal” donors gave way to the current terminology of “extended criteria donors” [53]. As clinicians have learnt to look at ECD with eyes wide open, the use of lower-quality donors has become routine practice to expand the donor pool. Donors are generally considered “extended” if there is a risk of primary non-function or initial poor function, although those that may cause late graft loss may also be included [54].

Traditionally, liver donors have been considered “bad” or “good” according to whether an extended criterion was present or not [55]. However, some concerns must be highlighted. First, each extended criterion has an evolving and subjective “tolerance threshold”: a 50-year-old donor in the 1990s was considered as risky as an 80-year-old donor is nowadays [56], [57], [58]. Second, even with ECD, graft and/or patient outcomes may not necessarily be so bad, as graft survival depends on several factors [59], [60]. Moreover, ECD may work well in high-risk recipients [38]. Third, some extended criteria can act in combination. Liver grafts from elderly donors and/or donors with steatosis are even more affected by prolonged cold ischemia time (CIT) and preservation injury [61], [62]. Indeed, the accumulation of ECD variables influences graft survival in a MELD-based allocation system for LT [63].

Feng et al. [41] introduced the concept of the donor risk index (DRI). DRI objectively assesses donor variables that affect transplant outcomes: donor age, donation after cardiac death (DCD) and split/partial grafts are strongly associated with graft failure; African-American race, low height and cerebrovascular accident as the cause of death are modestly associated with graft failure. Together with two transplant factors, CIT and sharing outside of the local donor area, these variables make up a quantitative, objective and continuous metric of liver quality based on factors available at the time of an offer. Donor quality represents an easily computed continuum of risk [55]. Unlike previous simplistic scores for donor risk assessment [64], [65], [66], [67], DRI offers a single value for each donor and makes it possible to compare outcomes in clinical trials and practice guidelines. DRI has been validated in the United States and, recently, in Europe [68], [69], [70], [71]. Perhaps the most important contribution of DRI is to give formal consideration to variables that previously were just intuitive [53]. However, although DRI is useful and shows significant differences within strata, several discrepancies between DRI values in Europe and the United States have been reported [71], limiting its universal applicability.

DRI offers a rationale for evidence-based D-R matching. However, even when DRI is considered at the time of the offer, D-R matching is not easily arranged, and the distribution of risks between donors and recipients depends on the allocation scheme. The two main possibilities of matching are: (1) synergism-of-risks matching, where a high-risk-for-high-risk policy matches high-DRI donors to high-MELD patients, or (2) division-of-risks matching, where a high-risk-for-low-risk (and vice versa) policy matches high-DRI donors to low-MELD patients (and vice versa). Again, the allocation principle dictates which of these policies may be adopted (Table 2): (a) under a sickest-first-based allocation, the trend has been a division-of-risks matching. Because the sickest candidate may presumably have a difficult postoperative course, a wise criterion would be to discard a high-DRI organ (with its additive worsening of graft survival) [39], [42], [68], [70], [71], [72]. (b) Under an SB allocation system, where the main goal is the maximization of utility, a synergism-of-risks matching must prevail [73]. In their first analysis, Schaubel et al. [38] showed a significantly higher risk to stable patients; however, grafts from ECD increase the chance of long-term survival for patients at high risk of dying of their liver disease. As Henri Bismuth stated, “the highest risk for a patient needing a new liver is the risk of never being transplanted” [74]. (c) Under a cost-effectiveness allocation system, MELD and DRI interact to synergistically increase the cost of LT. High DRI increases the cost of transplant at all MELD strata. Moreover, the effect of organ quality on cost is greater in high-MELD patients, whereas low-MELD patients had a minimal rise in cost when receiving ECD. From a conceptual view, a division-of-risks matching is mandatory according to economics [75].
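The correspondence between allocation principle and matching policy described above can be restated as a small lookup. This is a sketch for illustration only: the names are hypothetical, and the text gives no numeric cut-off for a "high" DRI or MELD, so the caller supplies those judgements as flags.

```python
from typing import Optional

# Matching policy favoured under each allocation principle, as summarised in the text.
POLICY_BY_PRINCIPLE = {
    "sickest-first": "division-of-risks",
    "survival-benefit": "synergism-of-risks",
    "cost-effectiveness": "division-of-risks",
}


def pair_type(high_dri: bool, high_meld: bool) -> Optional[str]:
    """Label a D-R pair; the high/low flags are supplied by the caller."""
    if high_dri and high_meld:
        return "synergism-of-risks"
    if high_dri != high_meld:
        return "division-of-risks"
    return None  # low-DRI donor with low-MELD patient: not named in the text


def fits_policy(principle: str, high_dri: bool, high_meld: bool) -> bool:
    """True when the pair is of the type favoured by the allocation principle."""
    return pair_type(high_dri, high_meld) == POLICY_BY_PRINCIPLE[principle]
```

For example, a high-DRI graft offered to a high-MELD candidate fits the survival-benefit principle but not the sickest-first or cost-effectiveness principles.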

Table 2. Donor-recipient matching according to the allocation scheme and the principle for allocation of scarce liver donors.

Some limitations regarding DRI must be addressed: first, DRI was derived from pre-MELD era data [41]; second, DRI is almost a donor age-related index because age is the most striking variable in its formulation; third, DRI is a hazard model of donor variables known or knowable at the time of procurement (as CIT and graft steatosis). Actually, macrosteatosis was excluded from the analysis even though it has been reported to be an independent risk factor for I/R injury and reduced graft survival [76], [77], [78] that could be useful in evaluating donor risk [79]. Finally, it has been argued that DRI is impractical [80] because, at the time of its conception, several variables were not available in the Scientific Registry of Transplant Recipients (SRTR) database. DRI may only partially predict the real magnitude of the effect of donor quality on transplant outcome. Like the MELD score, which contains no donor variables for prognosis, DRI contains no candidate variables. Per se, DRI alone is a suboptimal tool for D-R matching.

Combined donor-recipient based systems (Table 3)

Relatively few studies have attempted to develop comprehensive models for predicting post-transplant survival, none of which have been validated [64], [67]. We have discussed in a previous section the SB model, where candidates with different MELD scores show different benefit according to DRI [38], [39]. Amin et al. described a Markov decision analytic model to estimate post-transplantation survival when waiting for a standard donor and survival with immediate ECD transplant [81]. Under this decision model, transplantation with an available ECD graft should be preferred over waiting for a standard organ for patients with high MELD scores. At lower MELD scores, the SB depends on the risk of primary graft failure (PGF) associated with the ECD organ. These results support the concept of SB from a theoretical viewpoint. However, the study was based on simulation and the validity of its results rested on unverifiable assumptions (the possibility of recovery from PGF, the rate of retransplantation for PGF, the lack of consideration of late graft failure and the availability of a standard criteria donor liver) [38], [82].

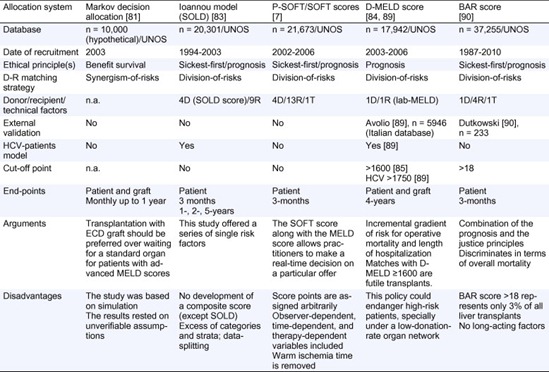

Table 3. Main combined donor-recipient-based systems for D-R matching.

n.a., not available.

Afterwards, Ioannou analysed a pre-MELD era database available from UNOS between 1994 and 2003 (about 30% of all eligible transplants at that period of time) [83]. Four donor and 9 recipient characteristics adequately predicted survival after LT in patients without hepatitis C virus, and a slightly different model was used for patients with hepatitis C virus. The model also described a risk score of the 4 donor variables included in the survival models, which was called Score Of Liver Donor (SOLD). This study only offered a series of single risk factors without the development of a composite score (except for donor criteria). Moreover, an excess of categories and strata of the variables included, and a data-splitting approach made it useless.

An ambitious scoring system that predicts recipient survival following LT deserves full consideration. Rana et al. [7] identified 4 donor, 13 recipient and 1 operative variables as significant predictors of 3-month mortality following LT. Two complementary scoring systems were designed: a preallocation Survival Outcomes Following Liver Transplant (P-SOFT) Score and a SOFT Score which is the result of adding P-SOFT points to points awarded from donor criteria, 1 recipient condition (portal bleed 48-h pretransplant) and two logistical factors (CIT and national allocation) at the time of procurement. Calculations of area under the ROC curves for 3-month survival showed P-SOFT and SOFT score values of 0.69 and 0.70, respectively. These two scores combine the sickest-first principle (MELD was included for regression analysis) and a prognosis principle (3-month mortality), but SB is not considered. Although the SOFT score along with the MELD score would theoretically allow practitioners to make a real-time decision on a particular offer, some concerns must be considered: (1) points were assigned to each risk factor based on its odds ratio (one positive/negative point was awarded to each risk factor for every 10% risk increase/decrease), which seems to be arbitrary; (2) the score includes observer-dependent variables (encephalopathy, ascites pretransplant), time-dependent variables (recipient albumin), or therapy-dependent variables (dialysis prior to transplantation, intensive care unit or admitted to hospital pretransplant, life support pretransplant); (3) the weight of preallocation variables (P-SOFT) is simply added to variables included at the offer time, which is an empirical assumption; (4) warm ischemia time was removed from the SOFT score (OR 2.3) since it cannot be predicted prior to transplantation; (5) the range of points of the groups of risk in the SOFT score is arbitrarily unequal.

There is certainly room for improvement in the high-risk-for-low-risk (and vice versa) policy. Recently, Halldorson et al. [84] have proposed a simple score, D-MELD, combining the best of the sickest-first policy (lab-MELD) and DRI (donor age). The product of these continuous variables would result in an incremental gradient of risk for operative mortality and complications estimated as length of hospitalization. A cut-off D-MELD score of 1600 defines a subgroup of D-R matches with poorer outcomes. The strengths of this system are simplicity, objectivity and transparency. A final rule of D-MELD would be to eliminate matches with D-MELD ≥1600 (futile transplants). D-MELD is a pure prognosis-allocation system and collides head-on with the SB principle. A 65-year-old donor would be refused for a MELD-25 candidate (D-MELD 1625), even though this match offers an SB of approximately 2.0 life-years saved [39]. This policy could endanger high-risk patients, especially in an organ network with a low donation rate: the refusal of a >1600 D-MELD match may not necessarily be followed by a favourable match in due time. According to the principle that transplants with 5-year patient survival <50% (5-year PS <50%) [18], [85], [86], [87], [88] should not be performed, in order to avoid organ wasting, a national Italian study has explored potential applications of D-MELD [89]. The cut-off value predicting a 5-year PS <50% was identified only in HCV patients, at a D-MELD ≥1750. For a given match, donor age (ranging 18–80 years) outweighs MELD score (ranging 3–40). It is paradoxical that the product of MELD score (with a weak ability to predict post-transplant mortality) and donor age (which influences graft survival) could strongly predict short- and long-term patient outcomes. D-MELD needs refinement and must tackle ethical challenges before implementation.
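Since D-MELD is simply the product of donor age and laboratory MELD, the example in the text can be checked directly. The sketch below uses hypothetical function names, and treating the cut-off as inclusive (greater than or equal to) is an assumption.

```python
FUTILITY_CUTOFF = 1600  # cut-off reported for D-MELD in the text


def d_meld(donor_age_years: float, lab_meld: float) -> float:
    """Product of donor age (years) and laboratory MELD score."""
    return donor_age_years * lab_meld


def would_be_refused(donor_age_years: float, lab_meld: float,
                     cutoff: float = FUTILITY_CUTOFF) -> bool:
    """True when the match reaches the cut-off (inclusive comparison assumed)."""
    return d_meld(donor_age_years, lab_meld) >= cutoff


# The example from the text: a 65-year-old donor and a MELD-25 candidate.
score = d_meld(65, 25)
refused = would_be_refused(65, 25)
```

The same candidate paired with a 40-year-old donor scores 1000 and falls well below the cut-off, which illustrates how strongly donor age drives the product.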

A novel score based on a combination of the prognosis and the justice principles has been reported recently. The balance of risk (BAR) score [90] includes six predictors of post-transplant survival: MELD, recipient age, retransplant, life support dependence prior to transplant, donor age and CIT. The strongest predictor of 3-month mortality was recipient MELD score (0–14 of 27 possible scoring points), followed by retransplantation (0–4 of 27 points). The BAR score discriminates in terms of overall mortality below and above a threshold at 18 points. Essentially, the BAR score is a division-of-risks matching system, where MELD (“justice”) is the most contributing factor, even though well-balanced by prognostic recipient and donor factors (“utility”). However, some cautions must be considered: first, maximum BAR score for low-MELD patients (<15) can reach up to 13 points; minimum BAR score for high-MELD patients (>35) would be 14 points. BAR score has an excessive linear correlation with MELD. With a cut-off of 18 points to split patient survival, the BAR score of a high-MELD candidate with two or more risk criteria (i.e., >60-year recipient and >40-year donor) will go above 18 points, and transplantation would be futile. In the UNOS database, BAR score >18 represents only 3% of all LT. Consequently, BAR score is an “all-or-nothing” system more than a “matching” system. Below a score of 18, LT would always be adequate, irrespective of recipient and/or donor factors; second, subsequently, no SB is offered by BAR score; and, third, BAR score was designed for predicting 3-month mortality, which seems poor for long-acting factors.
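The bounds quoted above follow from the point ranges alone. As a worked check, write the BAR score as B = M + R, where M is the MELD contribution (0–14 points) and R is the combined contribution of the five remaining predictors. The symbols B, M and R are introduced here for illustration, and the sketch assumes integer points, with MELD <15 scoring M = 0 and MELD >35 scoring M = 14, as the stated bounds imply.

```latex
B = M + R, \qquad 0 \le M \le 14, \qquad 0 \le R \le 27 - 14 = 13

% Low-MELD candidate (M = 0): the cut-off of 18 can never be reached
B_{\max} = 0 + 13 = 13 < 18

% High-MELD candidate (M = 14): only a few further points are needed
B_{\min} = 14 + 0 = 14, \qquad B > 18 \iff R \ge 5
```

Under these assumptions a low-MELD candidate stays below the threshold whatever the donor, whereas a high-MELD candidate crosses it with five further points, which is the "all-or-nothing" behaviour noted in the text.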

Matching systems for specific entities

Hepatocellular carcinoma

Hepatocellular carcinoma (HCC) represents one third of the indications for liver transplantation. As these patients may not be adequately scored by MELD, a direct consequence has been award methods with bonus points assigned for them with an extraordinary variability both in American [24], [25] and European centres [15]. The assignment of these awards has not been evidence-based and has been adjusted through trial and error. Single-centre series have reported their individual experiences with self-performed not-evidence-based systems. In these reports, regional monthly updated prioritization enlistments [14] or adjusted MELD scores [91] have been used. Moreover, not only from the recipient side, but also from the donor criteria, HCC recipients have been evaluated, thus advocating for an expanded use of ECD in them, due to the recipient risk of dropout mainly in high-risk HCC [92]. All these series justify their results by achieving equal deaths on waiting list or post-transplant survival ratios. However, global series reflect that the introduction of the MELD allocation system allowing priority scores to patients with limited-stage HCC has resulted in a 6-fold increase in the proportion of liver transplant recipients who have HCC. This is particularly worrying when more than 25% of liver donor organs are currently being allocated to patients with HCC. More interestingly, the supposed equal post-transplantation survival does not appear to be true for patients with tumors 3–5![]() cm in size and globally for all patients with HCC compared to patients without HCC [93]. It seems obvious that individual benefit is contrary to global benefit in the HCC setting. By current modified HCC allocation systems, the requirements of liver grafts certainly represent a major challenge because of the limited organ resources [94]. On the other hand, not offering a transplant to patients who have the potential to have good outcomes is ethically disturbing [95].

Hepatitis C

The problem of optimal D-R matching becomes considerably more complex in the setting of HCV recipients. HCV re-infects the liver graft almost invariably following reperfusion [96], with histological patterns of acute HCV appearing between 4 and 12 weeks post-transplant and chronic HCV in 70–90% of recipients after 1 year and in 90–95% after 5 years [97]. Recurrent HCV will lead 10–30% of recipients to progress to cirrhosis within 5 years of transplantation, with a rate of decompensation of >40% and >70% at 1 and 3 years, respectively [98].

Increased rates of re-transplantation and lower survival rates have been reported by most series in HCV recipients. Because of these results, the appropriateness of re-transplantation for HCV and the optimal timing of surgery in an era of organ shortage are under debate. In fact, it has been stated that re-transplantation is not an option for recurrent hepatitis C cirrhosis after LT unless it is performed in patients with late recurrence, stable renal function and the possibility of antiviral treatment post-LT [99]. This scenario adds extreme complexity to the development of matching systems for a subgroup of patients that still represents the most common indication for LT in several countries. In this context, the question of which liver should be allocated to HCV recipients is difficult to answer, as several ECD factors such as age [100], [101], steatosis, CIT [102] and I/R injury [103] have been reported to significantly increase viral recurrence and decrease patient and graft survival. Current evidence supports tipping the balance in favour of HCV recipients, as ECD factors may have an exponentially greater impact on their outcome than on that of non-HCV recipients [102]. However, this individual survival benefit is based on the current standard of a minimum benefit criterion of a 5-year patient survival >50%. If a global survival benefit were to be considered, with increasing rates of post-transplant survival and more stringent criteria, not only HCV recipients but also older and HCC recipients would have significantly reduced eligibility for liver transplantation [104].

The “ideal” D-R matching system

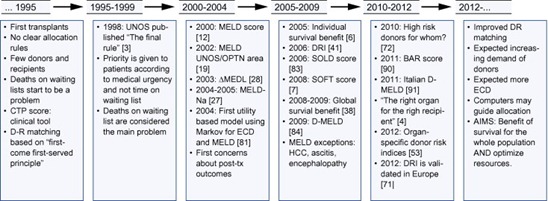

The ideal D-R matching system remains a chimera, mainly because of two factors: inconsistent evidence and a lack of reliable end points. While the concept of survival benefit is certainly very attractive, it is currently unrealistic to use it in real practice, given the difficulty of obtaining unbiased calculations. Calculating social benefit is obviously even more complex. Although sickest-first principles still prevail everywhere, and efforts towards optimizing isolated donor-recipient matching may be more practical and realistic, utilitarianism should be considered in the near future. The main problem nowadays is that every model is built with simple statistical calculations that analyze the impact of individual variables within multiple regression models. Unfortunately, this is not enough, and calculations on which life or death may depend may not be best left to human-guided decisions. The most complex models of survival benefit include no more than 20 variables and a single end point. Interesting attempts to improve on this simplistic evidence have been reported using artificial intelligence, which may compute hundreds of variables, combining their individual contributions (even when these are weak) and addressing different end points [105]. Although it has not yet been tested prospectively or in a randomized multicenter trial, this tool could potentially perform real-time analyses including several variables, providing an objective allocation system. Surely this concept will change allocation policies in the near future (Fig. 2).

Fig. 2. Chronology of donor-recipient matching in liver transplantation. As observed, prior to 1995, D-R matching was unclear. In the last 15–20 years, several changes have altered the mentality of liver transplant teams, leading to more complex methods of allocation in order to obtain the best survival and the lowest rate of deaths on the waiting list.

Antoine Augustin Cournot, in his theory of oligopoly (1838), describes how firms choose how much output to produce in order to maximize their own profit. However, the best output for one firm depends on the outputs of the others. A Cournot-Nash equilibrium occurs when each firm’s output maximizes its profits given the output of the other firms [106]. By analogy, the “ideal” D-R matching system would probably be one that satisfies global benefit while optimizing individual benefits, including both short- and long-term survival rates, and that fully assesses every donor, recipient and surgical variable known prior to the transplantation procedure.
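
As an illustration only, the equilibrium described above can be sketched numerically. The example below assumes a two-firm market with linear inverse demand P = a - b(q1 + q2) and a constant marginal cost c. These assumptions, the function names and the parameter values are hypothetical and are not taken from the source, and the sketch is not a model of organ allocation. Each firm repeatedly chooses its profit-maximising output given the other firm's output, until neither wishes to change.

```python
def best_response(q_other, a, b, c):
    """Profit-maximising output for one firm, given the rival's output.

    Assumes linear inverse demand P = a - b * (q_own + q_other) and a
    constant marginal cost c (illustrative assumptions, not from the source).
    """
    return max(0.0, (a - c - b * q_other) / (2 * b))


def profit(q_own, q_other, a, b, c):
    """Profit of one firm: (price - marginal cost) * own output."""
    price = a - b * (q_own + q_other)
    return (price - c) * q_own


def cournot_equilibrium(a, b, c, tol=1e-10, max_iter=1000):
    """Iterate best responses until neither firm changes its output."""
    q1 = q2 = 0.0
    for _ in range(max_iter):
        new_q1 = best_response(q2, a, b, c)
        new_q2 = best_response(new_q1, a, b, c)
        if abs(new_q1 - q1) < tol and abs(new_q2 - q2) < tol:
            return new_q1, new_q2
        q1, q2 = new_q1, new_q2
    return q1, q2
```

Under these assumptions, with a = 100, b = 1 and c = 10, both outputs settle at (a - c)/(3b) = 30, and neither firm can raise its own profit by changing its output alone, which is the defining property of the equilibrium.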

Conclusions

Donor-recipient matching is an amazing theoretical concept that is, nowadays, more a myth than a reality. Every score currently available focuses on isolated or combined donor and recipient variables. Unfortunately, to date, these scores are not statistically robust enough. Future scores for D-R matching will have to be built on more than a list of variables; they should consider the probability of death on the waiting list, post-transplant survival, cost-effectiveness and global survival benefit. Only when everything is considered in a single method may transparency, justice, utility and equity be achieved. Probably, the human mind is not accurate enough to put so many interactions in order. Surely, the future of graft allocation will be guided by computational tools that might give objectivity to an action that should be more a reality than a myth.
